Senior Design Projects

TSU Animatronic Tiger

Tennessee State University's mascot, the blue tiger, becomes an animatronic robot...

GESTURE BASED HUMAN-ROBOT INTERACTION

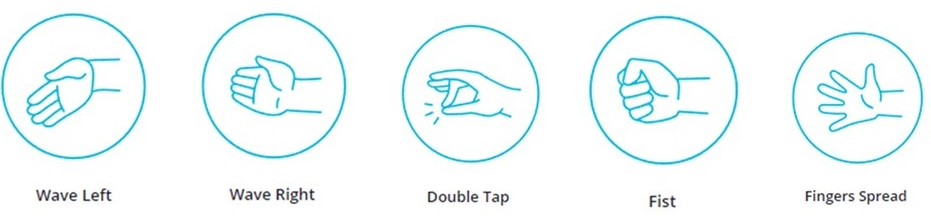

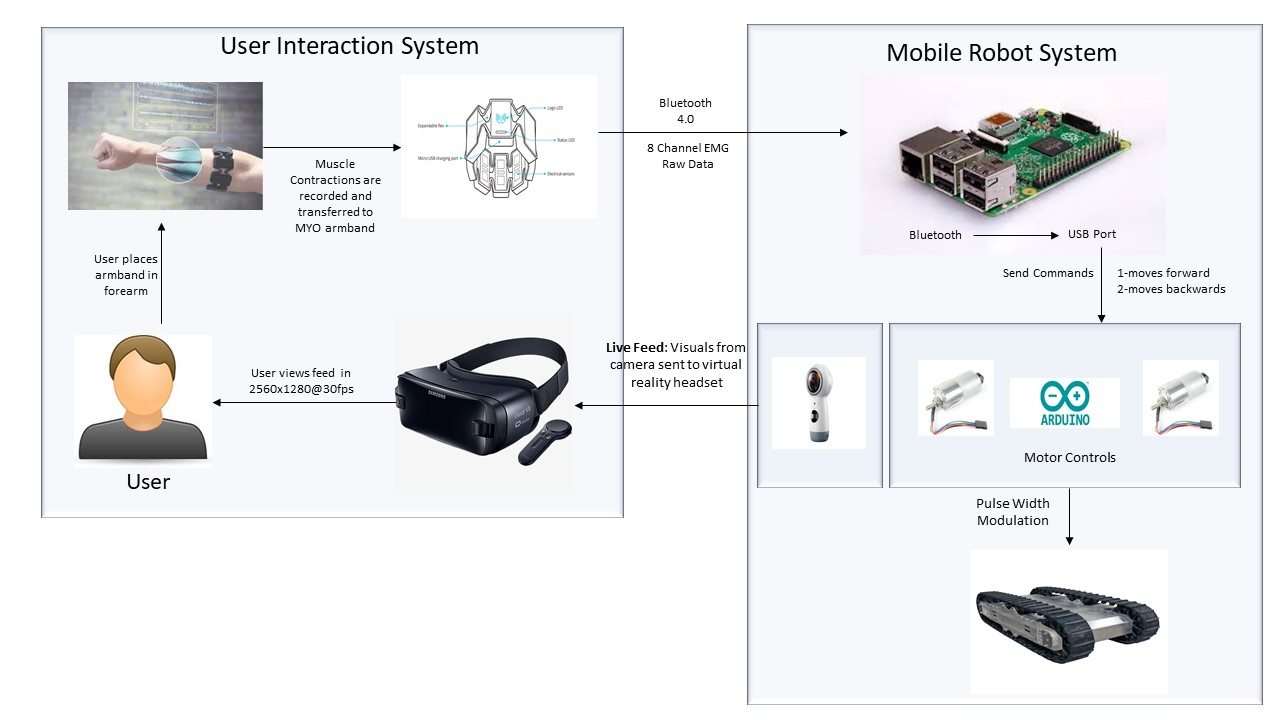

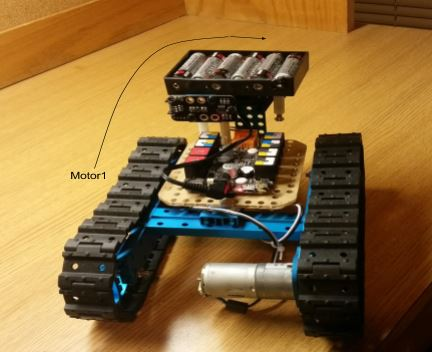

Over the past few years, researchers have tried to push the boundaries of what is possible in human-robot interaction. Some of these boundaries can be broken by means of physical ability. In this project, we establish an interaction between humans and robots using an armband that receives electromyography (EMG) signals from the contraction of muscles. This allows us to have full control of a robot while remaining aware of our surroundings and environment, which should make daily work more efficient and effective. In today's world, robots are steadily becoming an essential part of our everyday lives. They perform a variety of tasks, ranging from displaying the time on a smartwatch to assembling the pieces of a product to sending objects into space. Robots usually perform as commanded; however, there are times when they malfunction, causing a halt in productivity. When this happens, human-robot interaction becomes necessary for the robot to meet its objectives. As the use of robotics becomes more accepted and essential in today's society, we can increase safety and productivity in the workplace. Many of these robots are controlled remotely. However, remote control can greatly restrict the operator's awareness of the environment, because the operator is focused primarily on the remote-control device. It is important for an operator to be completely aware of his or her surroundings at all times. In this project, a wearable device placed on the forearm receives biosignals from the contractions of arm muscles to interact with mobile robots.
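As a rough illustration of how muscle contractions might be turned into robot commands, the sketch below computes the root-mean-square (RMS) level of a short window of EMG samples per channel and picks the command tied to the most active channel. The channel-to-command mapping, the threshold value, and the function names are assumptions made for this example; the project description does not specify the armband's data format or the gesture set.

```python
import math

# Hypothetical mapping from armband channel index to a robot command.
CHANNEL_COMMANDS = {0: "forward", 1: "left", 2: "right"}

# Assumed activation level in normalized units; a real system would calibrate this.
THRESHOLD = 0.3


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def command_from_emg(windows, threshold=THRESHOLD):
    """Return the command of the most active channel, or "stop" if all are quiet.

    windows maps a channel index to a list of recent samples.
    """
    levels = {channel: rms(samples) for channel, samples in windows.items()}
    strongest = max(levels, key=levels.get)
    if levels[strongest] < threshold:
        return "stop"
    return CHANNEL_COMMANDS.get(strongest, "stop")
```

Defaulting to "stop" when no channel crosses the threshold is a conservative choice: a relaxed arm or an unrecognized channel never moves the robot.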

SMART SURVEILLANCE ROBOT

For our project, we have developed a software system for a mobile robot that acts as a surveillance system able to make security decisions based on context awareness. The system is mobile, meaning that the user is able to move the robot to any position or location he or she chooses. The robot can only be operated by one user at a time. First, the surveillance system takes a picture every five seconds using a Logitech camera connected to the Raspberry Pi (B3 Model). The pictures are taken in a continuous loop and saved to a file. The pictures are then sent to the Clarifai API, an image recognition system that analyzes the pictures sent from the saved file. In return, Clarifai sends tags back to the Raspberry Pi, which leads to the decision-making phase. The database contains a list of warning keywords that describe threats and/or suspicious behavior. If any of the tags used to describe the images match the warning keywords in our database, the program sends an alert email to the user, along with a message letting the user know that something suspicious is taking place. All of the code for this project is implemented in Java using PyCharm.
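The decision-making phase described above can be sketched as a simple set intersection between the tags returned for an image and the list of warning keywords. This is a minimal illustration written in Python for brevity, not the project's own code: the keyword list, the function names, and the alert wording are all assumptions, and the calls to Clarifai and to the email service are left out.

```python
# Hypothetical keyword list; the project's actual database contents are not given.
WARNING_KEYWORDS = {"weapon", "gun", "knife", "fire", "intruder"}


def find_warnings(tags, warning_keywords=WARNING_KEYWORDS):
    """Return the sorted warning keywords that appear among the image tags."""
    # Normalize case and whitespace so "Knife" and "knife " both match.
    normalized = {tag.strip().lower() for tag in tags}
    return sorted(normalized & set(warning_keywords))


def build_alert(tags, warning_keywords=WARNING_KEYWORDS):
    """Return an alert message if any tag matches, otherwise None."""
    matches = find_warnings(tags, warning_keywords)
    if not matches:
        return None
    return "Suspicious activity detected: " + ", ".join(matches)


if __name__ == "__main__":
    print(build_alert(["person", "Knife", "kitchen"]))
    print(build_alert(["tree", "sky"]))
```

In a loop that captures a picture every five seconds, a non-None result from `build_alert` would be the trigger for sending the alert email.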

Player Too

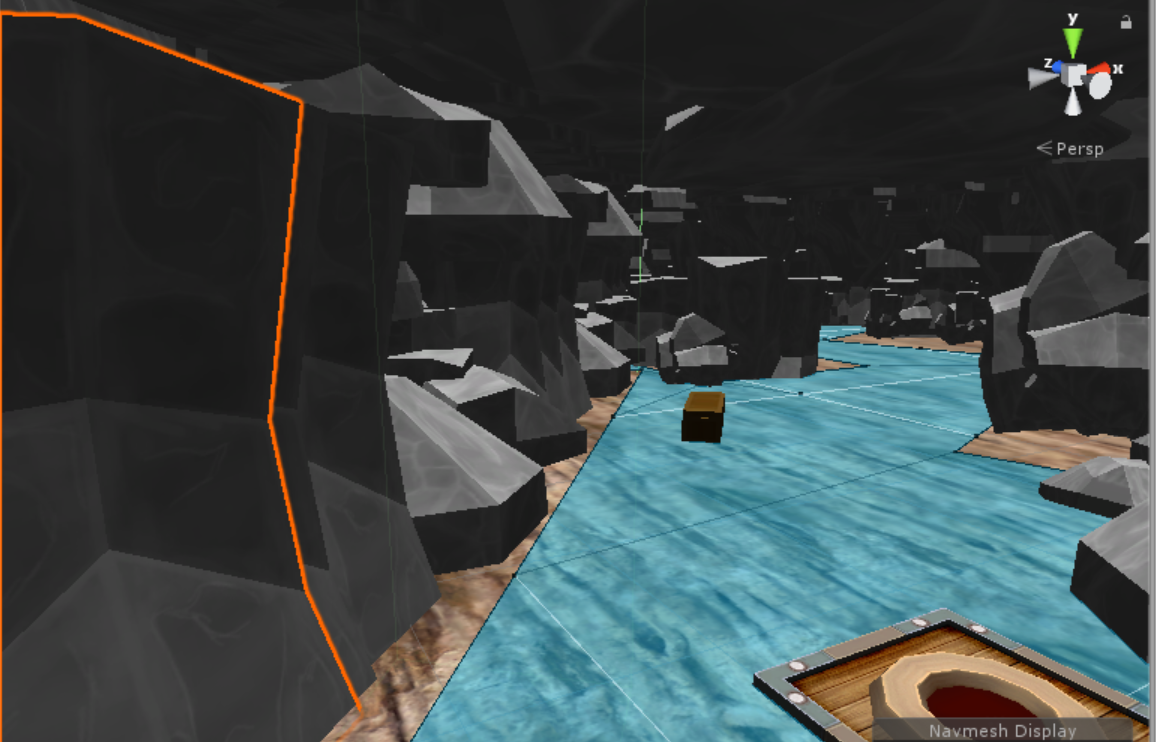

Many games nowadays follow a few simple rules: the player controls one main character and takes part in a story about that character from either a first- or third-person perspective. Our goal with this project was to learn the ins and outs of making games using Unity and other programs. The goal of the game itself, however, was to establish a unique player experience in which you do not control the main character and instead experience the world from a second-person perspective. This project uses Unity to develop a video game world in which artificial intelligence controls the characters and you play a secondary role. This is called a second-person perspective. Some games have used this perspective in the past, but it is not a very popular option; most games use the first- or third-person perspective. First person is the perspective from the eyes of the character you control, while third person is the perspective, usually over the shoulder, of the character you control. Second-person perspective is when you observe or control the primary character from the perspective of a separate character. The most popular example of a game in second-person perspective is Super Mario 64, where you control Mario but the camera is actually the first-person perspective of a Lakitu, one of the enemies in the series. Unity makes applying this second-person perspective fairly easy. Unity allows you to move and place things in a 3D environment, so you can test and see how things will look when the game is run while you are making it. You can also move the camera that sets the perspective of the game; the camera can be placed anywhere and can be programmed to move along with any object. Unity also provides assets through an asset store, so we do not have to worry about modeling, only design and programming. Unity allows quick and easy startup for projects like this and is easily the best choice of engine for people who do not have a large company capable of creating its own.

Project MITTENS

The Motion Integrated Technological Turntable Environment Nexus Simulator, or MITTENS for short, is a project geared towards giving the user the freedom to manipulate an object in a virtual space with his or her own hands using realistic hand gestures and motions. The manipulation of virtual items is not a new endeavor in the digital technology industry. Many video game companies have built some form of motion control into their new hardware. A prime example is Nintendo, which has been known as a pioneer of motion controls in the video game industry since the release of the Nintendo Wii in 2006. The Nintendo Wii uses what is called a Wiimote as its primary controller, which handles all of the features that involve motion controls. The Wiimote senses acceleration, and a sensor located at its top detects infrared light from a sensor bar placed either above or below a TV screen. Cooking Mama, a game released on the Wii, came close to applying realistic motion controls to everyday tasks. Through a combination of motion sensing and timed button presses, the player is able to closely mimic actions ranging from peeling potatoes and onions to sautéing food on a hot skillet. The only issue with this form of motion control is that it is limited by the buttons available on the controller as well as by its ability to sense the light from the sensor bar or another infrared light source.

Our project aims to forgo a controller that relies on infrared light sensing in favor of a more practical method of detecting motion (i.e., through a built-in camera) using a software library known as OpenCV (Open Source Computer Vision Library). OpenCV includes hundreds of algorithms that can, among other things, recognize faces, identify objects, track movements such as hand motions and eye movement, and compare images. A peripheral created by Microsoft known as the Kinect bears a resemblance to what we have in mind for the project; it uses a camera with an infrared grid to calculate depth. The Kinect is operated by the body of the user, provided he or she is within sight of the device's camera.
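To give a feel for camera-based motion detection, the sketch below uses frame differencing: it compares two grayscale frames, finds the pixels that changed by more than a threshold, and tracks the centroid of those pixels from one frame pair to the next. It is written with plain Python lists so that it runs without dependencies and does not use the OpenCV API; the function names, the threshold, and the tiny test frames are assumptions for illustration, and a real hand tracker would need far more robust processing.

```python
def motion_centroid(prev_frame, frame, threshold=30):
    """Return the (row, col) centroid of pixels that changed by more than
    threshold between two grayscale frames, or None if nothing changed."""
    count = row_sum = col_sum = 0
    for r, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for c, (before, after) in enumerate(zip(prev_row, row)):
            if abs(after - before) > threshold:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None
    return (row_sum / count, col_sum / count)


def horizontal_direction(prev_centroid, centroid):
    """Classify horizontal movement between two motion centroids."""
    if prev_centroid is None or centroid is None:
        return "none"
    dx = centroid[1] - prev_centroid[1]
    if dx > 0:
        return "right"
    if dx < 0:
        return "left"
    return "still"


if __name__ == "__main__":
    # Three tiny 2x4 frames: a bright blob appears, then moves to the right.
    empty = [[0, 0, 0, 0], [0, 0, 0, 0]]
    blob_left = [[0, 200, 0, 0], [0, 200, 0, 0]]
    blob_right = [[0, 0, 0, 200], [0, 0, 0, 200]]
    first = motion_centroid(empty, blob_left)
    second = motion_centroid(blob_left, blob_right)
    print(horizontal_direction(first, second))
```

A direction label like this could then be mapped to an action on the virtual object, such as rotating it left or right.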